Robotic Manipulation

Robotic manipulation refers to the ways robots interact with the objects around them: grasping an object, opening a door, packing an order into a box, folding laundry… All these actions require robots to plan and control the motion of their hands and arms in an intelligent way. At Leeds, our research focuses on developing such algorithms and systems.

We focus on robots manipulating objects in unstructured human environments, such as our homes, as well as robots working on manufacturing and assembly tasks.

Algorithms for Manipulation Planning

Imagine opening the fridge to get a coke can out; you notice there are some beer cans in front of it on the shelf; you nudge the beer to the side with your hand to create the necessary space, and then get the coke can out. How do you decide where to contact the beer can? How do you predict how it will move as a result of your contact? How do you know how much force to apply? These are all questions robots also need to answer if we want them to perform intelligent manipulation. At Leeds, we are developing intelligent algorithms to enable them to do that.

Kinematics of Prehension

Prehension - the ability to grasp and manipulate objects - is one of the most fundamental of human skills. Expert manual interaction with an object requires the actor to move their hand to the object of interest (the precontact phase), and then apply the appropriate fingertip forces in order to manipulate the object (the contact phase). The physical properties of the object clearly affect the fingertip forces required for manipulation (higher forces are needed when the contact surface is slippery). Other object properties (how far it is from the body, its size, etc.) also affect the forces required and the accuracy requirements for reaching-and-grasping. Our research is revealing the lawful changes that occur in human prehension as the properties of an object alter. We can then use these rules to determine the nature of the control signals that are used to control a robotic hand. Our team have created simulations that allow us to generate human-like movement trajectories on the basis of relatively straightforward control signals. These signals can then be used to control different robotic arms.
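As a rough illustration of how a simple control signal can produce a human-like reach, the sketch below uses the minimum-jerk profile, a widely used model of point-to-point hand movement. This is an assumption chosen for illustration, not necessarily the model behind the simulations described above; the function name and its parameters are hypothetical.

```python
def minimum_jerk(start, goal, duration, steps):
    """Sample a 1-D minimum-jerk reach from start to goal.

    Illustrative only: start and goal are positions (e.g. metres),
    duration is the movement time in seconds, and steps is the number
    of intervals to sample. Returns a list of (time, position) pairs.
    """
    samples = []
    for i in range(steps + 1):
        tau = i / steps  # normalised time in [0, 1]
        # Quintic blend with zero velocity and acceleration at both ends,
        # which gives the smooth, bell-shaped speed profile seen in reaching.
        s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
        samples.append((tau * duration, start + (goal - start) * s))
    return samples


if __name__ == "__main__":
    # A 30 cm reach lasting one second, sampled at 11 points.
    for t, x in minimum_jerk(0.0, 0.3, 1.0, 10):
        print(f"t={t:.1f}s  x={x:.3f}m")
```

Here the whole trajectory is determined by three numbers (start, goal, and duration), which is the sense in which the control signal is straightforward. Changing the duration or distance rescales the same movement shape.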

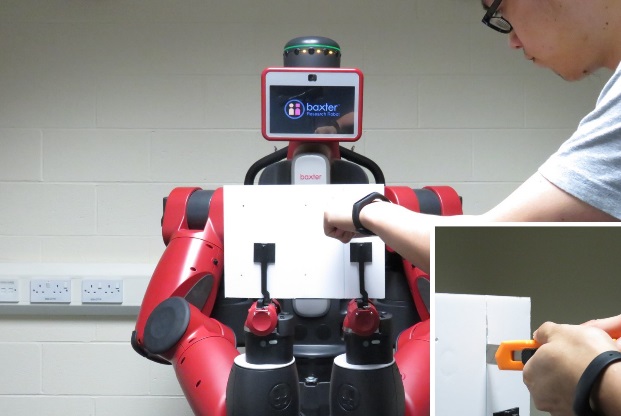

Human-Robot Collaborative Manipulation

Imagine coming back home from a hardware store with planks of wood and working with your friend to manufacture a table for yourself. You will need to collaborate to perform operations such as cutting parts off, inserting nails, drilling holes, and screwing in fasteners. We get robots to help humans perform similar tasks. To do this, the robot needs to decide how to grasp the workpieces (e.g. wood planks) and how to move to perform these operations. At Leeds, we develop planning algorithms that make such decisions.

Human-to-Robot Skill Transfer for Reaching-to-Grasping

Our work is also exploring the decisions that humans make when reaching-to-grasp objects. Humans are able to rapidly select the best way to grasp an object (e.g. pick up a glass from the side or the top) and decide how to navigate their hand around obstacles within the workspace. These are problems that our current robot systems cannot solve. We use Virtual Reality systems to explore how humans decide on the optimal way to grasp an object, and this research allows us to identify control strategies that we can then use when trying to control robotic systems.

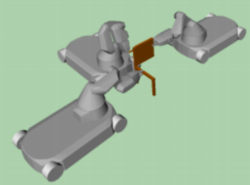

Multi-Robot Collaborative Manipulation for Assembly

Just like humans, multiple robots sometimes need to collaborate on manipulation actions. Whether the task is transporting a large and heavy object, or drilling, cutting, polishing, or assembly, the robots need to reason about how they collaborate with each other. At Leeds, our research focuses on increasing the capabilities of collaborative robots, particularly for manufacturing tasks.