Robotic Perception

Academic Contact: Dr Mehmet Dogar

Academic Staff: Dr Duygu Sarikaya, Dr Shufan Yang, Dr Mehmet Dogar, Dr Andy Bulpitt, Dr Des McLernon, Professor Anthony Cohn, Professor David Hogg, Professor Mark Mon-Williams, Professor Netta Cohen

Our work focuses on activity analysis from video, ranging from fundamental research on categorisation, tracking, segmentation and motion modelling to the application of this research in several areas. Part of the work explores the integration of vision within a broader cognitive framework that includes audition, language, action and reasoning.

Physics-based object tracking: Tracking an object in clutter with a camera while a robot is manipulating it is particularly difficult, because the surrounding objects and, inevitably, the robot hand block the camera's view. In our work, we use information about the robot's controls during non-prehensile manipulation to integrate physics-based predictions of the object's motion with the camera view. The result is smooth and consistent tracking of the object, even under heavy occlusion.
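The idea of combining a physics-based motion prediction with camera observations can be illustrated with a minimal sketch. It assumes a scalar Kalman filter per axis as the fusion method and a hypothetical quasi-static pushing model (`physics_prediction`, with a made-up `gain`) standing in for a real physics simulation; these names, the noise values and the choice of filter are assumptions for illustration, not the method used in this work. When the camera view is blocked (`None`), the estimate relies on the physics prediction alone.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float]


@dataclass
class AxisFilter:
    """Scalar Kalman filter for one coordinate of the object's position (assumed fusion method)."""
    x: float         # position estimate
    p: float         # variance of the estimate
    q: float = 1e-4  # process noise: uncertainty added by each physics prediction (assumed value)
    r: float = 1e-2  # measurement noise: uncertainty of a camera detection (assumed value)

    def predict(self, dx: float) -> None:
        self.x += dx      # move by the displacement the physics model predicts
        self.p += self.q  # uncertainty grows while relying on the model alone

    def update(self, z: float) -> None:
        k = self.p / (self.p + self.r)  # Kalman gain
        self.x += k * (z - self.x)      # pull the estimate toward the camera detection
        self.p *= 1.0 - k               # uncertainty shrinks after a measurement


def physics_prediction(pusher_d: float, in_contact: bool, gain: float = 1.0) -> float:
    """Hypothetical quasi-static pushing model: while in contact, the object moves by
    `gain` times the pusher displacement; otherwise it stays where it is."""
    return gain * pusher_d if in_contact else 0.0


def track(start: Vec, pusher_steps: List[Vec], contact: List[bool],
          camera: List[Optional[Vec]], p0: float = 1e-2) -> List[Vec]:
    """Fuse robot control information (pusher steps) with camera detections over time."""
    fx, fy = AxisFilter(start[0], p0), AxisFilter(start[1], p0)
    estimates: List[Vec] = []
    for (dx, dy), touching, obs in zip(pusher_steps, contact, camera):
        fx.predict(physics_prediction(dx, touching))
        fy.predict(physics_prediction(dy, touching))
        if obs is not None:  # object visible: fuse the camera detection
            fx.update(obs[0])
            fy.update(obs[1])
        # obs is None: occluded (e.g. by the robot hand), keep the physics-only estimate
        estimates.append((fx.x, fy.x))
    return estimates
```

For example, `track((0.0, 0.0), [(0.01, 0.0)] * 5, [True] * 5, [None] * 5)` keeps following the push for five fully occluded steps, ending near x = 0.05. The design choice here is that the physics model never stops contributing: camera detections only correct it when they are available.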

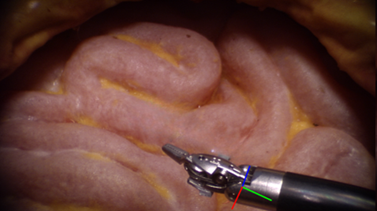

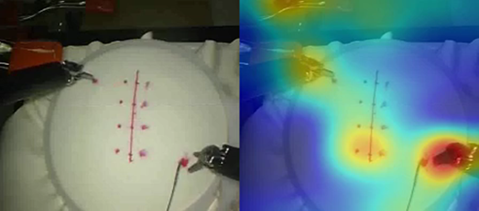

Surgical Vision and Perception, Situation Awareness in Surgery: Surgical robotic tools have been giving surgeons a helping hand for years. While they provide surgeons with great control, precision and flexibility, they do not yet address the need for cognitive assistance in the operating theatre. We are on the verge of a new wave of innovations in artificial intelligence-powered operating theatre technologies, and surgery is increasingly becoming data-driven. We believe that situation awareness is a key step towards automation in surgery, and we envision situation-aware operating theatre technologies that use data to perceive their environment, comprehend ongoing activities and processes, project the outcomes of possible actions, and complement the surgical team by providing real-time guidance during complex tasks and unexpected events. Recent advances in computer vision and machine learning, combined with surgical knowledge representation, can address these needs. We explore how the problem of situation awareness in surgery can be reduced to a set of computer vision problems. Keywords: surgical activity recognition, surgical workflow modelling, surgical video captioning, surgical tool detection and tracking, segmentation of anatomical regions, 6DoF pose estimation

6DoF Surgical Tool Pose Estimation

Using Human Gaze for Surgical Activity Recognition

Activity monitoring and recovery: Most existing approaches to activity recognition are designed for after-the-fact classification, labelling an activity only after fully observing a video of that single activity. Moreover, such systems usually expect the same number of people or objects to be observed throughout the activity, whereas in realistic scenarios people and objects often enter and leave the scene while the activity is in progress. Our activity recognition approach has three main objectives: 1) to recognise the current event from a short observation period (typically two seconds); 2) to anticipate the most probable event to follow the current one; 3) to recognise deviations in the activity.
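The three objectives can be made concrete with a small sketch. It assumes hypothetical per-frame event labels from some upstream classifier, a frame rate of 25 fps, a majority vote over the two-second window, and a first-order Markov transition model with a made-up deviation threshold; all of these are illustrative assumptions, not the approach described above.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Sequence

FPS = 25              # assumed video frame rate
WINDOW_SECONDS = 2.0  # short observation period mentioned in the text


def recognise_current(frame_labels: Sequence[str], fps: int = FPS,
                      window_s: float = WINDOW_SECONDS) -> str:
    """Objective 1: label the current event using only the most recent window,
    here by majority vote over (hypothetical) per-frame classifier outputs."""
    n = max(1, int(fps * window_s))
    return Counter(frame_labels[-n:]).most_common(1)[0][0]


class TransitionModel:
    """First-order Markov model over event labels (an assumed modelling choice)."""

    def __init__(self) -> None:
        self.counts: Dict[str, Counter] = defaultdict(Counter)

    def fit(self, sequences: List[List[str]]) -> "TransitionModel":
        # Count how often each event is directly followed by each other event.
        for seq in sequences:
            for prev, nxt in zip(seq, seq[1:]):
                self.counts[prev][nxt] += 1
        return self

    def prob(self, prev: str, nxt: str) -> float:
        followers = self.counts.get(prev, Counter())
        total = sum(followers.values())
        return followers[nxt] / total if total else 0.0

    def anticipate(self, current: str) -> Optional[str]:
        """Objective 2: the most probable event to follow the current one."""
        followers = self.counts.get(current)
        return followers.most_common(1)[0][0] if followers else None

    def is_deviation(self, prev: str, current: str, threshold: float = 0.1) -> bool:
        """Objective 3: flag transitions that were rare or unseen in the training data."""
        return self.prob(prev, current) < threshold
```

As a usage example, fitting on `[["enter", "pick", "carry", "drop"], ["enter", "pick", "carry", "drop"], ["enter", "pick", "drop"]]` makes `anticipate("pick")` return `"carry"`, while `is_deviation("carry", "leave")` is `True` because that transition was never observed. Because recognition uses only the last window, new people or objects entering mid-activity affect just the frames in which they appear.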

Seeing to Learn: Observation Learning in Robotics: Seeing to Learn (S2l) is an ambitious computer vision and robotics project currently under way at the University of Leeds, which aims to develop advanced observation learning methods for robotic systems. It addresses the inability of current robotic systems to learn from human demonstrations. The project envisions a future in which robots acquire new skills simply by observing humans perform a task, or even by watching online tutorial videos of demonstrations. In future, robots equipped with these learning methods could be deployed in a range of real-world settings, from homes to workplaces such as construction sites, where a robot could learn relevant tasks such as drilling holes, hammering nails or tightening bolts just by observing other workers.

Unsupervised activity analysis: Our goal is to learn conceptual models of human activity from observation by a mobile robot, given minimal guidance. The ultimate aim is to enable a robot and people to share an understanding of what is occurring in the environment, which is a prerequisite for the robot to become a useful assistant.

Tracking objects: This work applies local and global constraints in an optimisation procedure in which the interpretation of the tracks (local constraints) and of events (global constraints) mutually influence each other. We build a model of the person-object relationship over time that focuses on the carry event. Closed contours that are approximately convex are detected as potential carried objects and form a set of initial object detections. A high-level interpretation of which objects are carried, when, and by whom is computed from the high-confidence object detections. The current high-level interpretation induces a set of object tracks, and the confidence estimates of the object detections are updated based on these tracks. The procedure then returns to the high-level interpretation step and repeats until convergence.
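The alternation between high-level interpretation, induced tracks and confidence updates can be sketched as a simple fixed-point loop. This is a minimal illustration under assumed rules: a confident detection is assigned to the nearest person within a hypothetical `max_dist`, a track is the set of frames in which a person carries something, and a detection gains confidence by a fixed `step` when a nearby person carries an object in an adjacent frame (and loses it otherwise). Initial confidences, which in the described work come from approximately convex closed contours, are simply given as numbers here. None of these names or rules are taken from the original method.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple

Point = Tuple[float, float]
Persons = Dict[int, Dict[str, Point]]  # frame -> {person_id: position}


@dataclass
class Detection:
    frame: int
    pos: Point
    confidence: float  # initial score, e.g. derived from how convex the closed contour is


def _dist(a: Point, b: Point) -> float:
    return ((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2) ** 0.5


def interpret(dets: List[Detection], persons: Persons,
              conf_thresh: float, max_dist: float) -> Dict[int, Optional[str]]:
    """High-level interpretation: for each confident detection, the nearest person
    within max_dist who is taken to carry it (None if nobody is close enough)."""
    carrier: Dict[int, Optional[str]] = {}
    for i, d in enumerate(dets):
        if d.confidence < conf_thresh:
            continue
        best, best_dist = None, max_dist
        for pid, p in persons.get(d.frame, {}).items():
            if _dist(p, d.pos) <= best_dist:
                best, best_dist = pid, _dist(p, d.pos)
        carrier[i] = best
    return carrier


def induce_tracks(dets: List[Detection],
                  carrier: Dict[int, Optional[str]]) -> Dict[str, Set[int]]:
    """Tracks induced by the interpretation: frames in which each person carries an object."""
    tracks: Dict[str, Set[int]] = {}
    for i, pid in carrier.items():
        if pid is not None:
            tracks.setdefault(pid, set()).add(dets[i].frame)
    return tracks


def update_confidence(dets: List[Detection], persons: Persons, tracks: Dict[str, Set[int]],
                      max_dist: float, step: float = 0.1) -> None:
    """Raise the confidence of detections supported by a track in an adjacent frame,
    lower it otherwise (modifies dets in place)."""
    for d in dets:
        near = [pid for pid, p in persons.get(d.frame, {}).items() if _dist(p, d.pos) <= max_dist]
        supported = any(f in tracks.get(pid, set())
                        for pid in near for f in (d.frame - 1, d.frame + 1))
        if supported:
            d.confidence = min(1.0, d.confidence + step)
        else:
            d.confidence = max(0.0, d.confidence - step)


def track_carried_objects(dets: List[Detection], persons: Persons, conf_thresh: float = 0.5,
                          max_dist: float = 1.0, max_iter: int = 20) -> Dict[int, Optional[str]]:
    carrier = interpret(dets, persons, conf_thresh, max_dist)
    for _ in range(max_iter):
        tracks = induce_tracks(dets, carrier)
        update_confidence(dets, persons, tracks, max_dist)
        new_carrier = interpret(dets, persons, conf_thresh, max_dist)
        if new_carrier == carrier:  # interpretation stopped changing: converged
            break
        carrier = new_carrier
    return carrier
```

In a toy scene where one person walks along a line carrying an object that is detected in every frame, a low-confidence detection in the middle frame is promoted because its neighbours are tracked, while an isolated detection far from any person is gradually suppressed. The `max_iter` cap guards against the loop oscillating instead of converging.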